The General Data Protection Regulation (GDPR) is now in the home straight, with publication of final, final text expected in Q1 of 2016 (expect something to happen towards the end of January).

One of the small and subtle changes buried in the 209 pages of text in the most recent copy I have come into possession of is the apparent removal from the Regulation of any specific reference to personal liability of officers, directors or managers of bodies corporate where their actions (or inactions) cause an offence to be committed. This is a power that the Irish DPC has under current legislation (Section 29 of the Data Protection Acts and Section 25 of SI336, the ePrivacy Regulations). The DPC has used it judiciously over the past few years, but it has served to focus the minds of managers and directors of recidivist offending companies when the sanction has been threatened or applied. The knock-on impact of such a personal prosecution can affect career prospects in certain sectors, as parties found guilty of such offences may struggle to meet fitness and probity tests for roles in areas such as Financial Services.

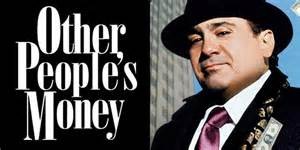

The omission of this power from the GDPR weakens the enforcement tools that a Regulator has available, weakens the ability for Regulators to influence the internal organisational ethic of a body corporate when it comes to personal data, and invites officers, directors, and managers (particularly in larger organisations) to engage in “Mental Discounting” because the worst case scenario that can occur is a loss of “Other People’s Money”, not a direct impact to them.

I’ve written about this before on this blog, in the context of organisations weighing up “worst case scenarios” in compliance and assessing whether the financial or other penalties are greater or lesser than the value derived from breaching the rules (search for “mental discounting”). However, the absence of a personal risk to the personal money of officers, directors, or managers also creates a problem when we consider the psychology of risk, given that our risk assessment faculties are among the oldest parts of our brain:

- We are really bad at assessing abstract risk (we evolved to understand direct physical risks, not the risks associated with abstract and intangible concepts, like fundamental rights, data, and suchlike).

- We tend to downplay risks that are not personalised (if there isn’t a face to it, the risk remains too abstract for our primitive brain. This is also the difference between comedy and tragedy… comedy is somebody falling off a ladder. Tragedy is me stubbing my toe).

So, consider a decision about the processing of personal data that carries a vague probability of a potentially significant, but more probably manageable, financial penalty to an abstract intangible entity (the company we work for), and no impact of any kind on a tangible and very personal entity (the individual making the decision). Faced with that decision, people will invariably do the thing that they are measured against and on which their bonus or promotion depends.

The absence of an “individual accountability” provision in the GDPR means that decision makers will be gambling with Other People’s Data and Other People’s Money with no immediate risk of tangible sanction. If the internal ethic of a company culture is to take risks and ‘push the envelope’ with personal data, and that is what people are measured and rewarded on, that is what will be done.

In a whitepaper I co-authored with Katherine O’Keefe for Castlebridge, we discussed the role of legislation in influencing the internal organisational ethic. The potential for personal sanctions for acting contrary to the ethical standards expected by society creates a powerful lever for evolving risk identification, risk assessment, risk appetite, and balancing the internal ethic of the organisation against that of society. Even if only used judiciously and occasionally, it focuses the attention of management teams on data and data privacy as business critical issues that should matter to them. Because it may impact their personal bottom line.

Absent such a means of sanction for individuals, I fear we will see the evolution of a compliance model based around “fail, fail fast, reboot” where recidivist offender decision makers simply fold the companies that have been found to have committed an offence and restart with the same business model and ethic a few doors down, committing the same offences. Regulators lacking a powerful personal sanction will be unable to curtail such an approach.

After all, it’s just other people’s money when you get it wrong with other people’s data.